AI Frontend Generator Comparison: Claude Code vs v0 vs Cursor vs Replit

Photo by Luke Jones on Unsplash

AI frontend generators are surging in popularity, with what seems like several new tools announced every week. Every major WYSIWYG platform provider appears to be launching its own solution in this space.

With Claude Code and similar AI-powered development tools drawing so much attention recently, now is a good time to examine these platforms in depth and see how they really compare.

I tested several of these tools thoroughly and want to share my findings with you.

Hypothesis

I am working under the assumptions outlined in my post The AI Future of Frontend Development. I want to understand to what extent frontend development as a discipline will become obsolete over time.

My hypothesis is that in the future, everything from design, through building a design system, to production frontend code could be done by AI, guided by experts, of course.

As more developers explore the potential of AI and incorporate it into their workflows, we will undoubtedly see a range of new innovations that will continue to shape the industry.

Well, here we are.

As more tools emerge that can produce user interfaces that look good, perform well, and are accessible, designers, business people, and engineers are increasingly likely to reach for these tools instead of asking an engineer to build interfaces for them.

This represents a fundamental shift in software development, which Andrej Karpathy recently termed "vibe coding". Instead of writing code by hand, developers describe their project to an AI, which generates code based on the prompt. The developer then evaluates the results and requests improvements without reviewing the code manually. This approach favors iterative experimentation over traditional code structure and lets amateur programmers produce software without extensive training, though it raises questions about understanding and accountability.

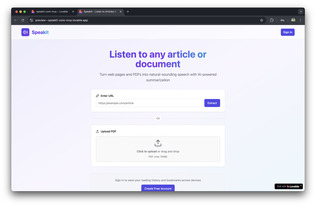

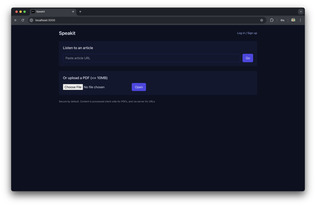

This experiment focuses purely on the web. I used a greenfield project and did not look at complex long-term projects or maintainability. It is a single-person test, so collaborative features were out of scope.

Structured approach

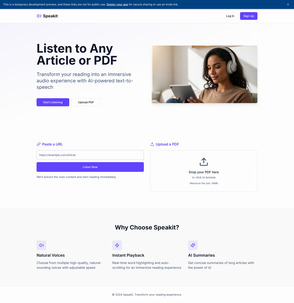

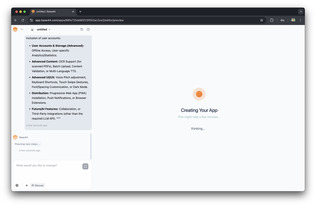

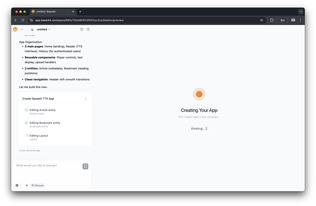

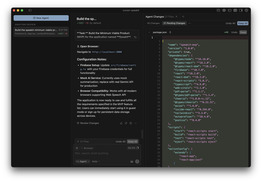

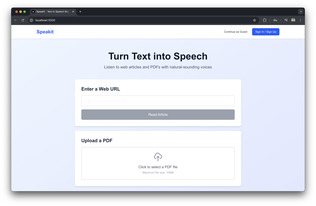

For this experiment, I used a structured approach. I generated a list of features for a greenfield project that I wanted the tools to build.

I then fed each tool the same prompt along with the feature list.

I generated this feature list using Google's Gemini AI, asking it to create a markdown file that works well with LLMs.

Here is the feature list: speakit-feature-list.md

Here is the prompt I used:

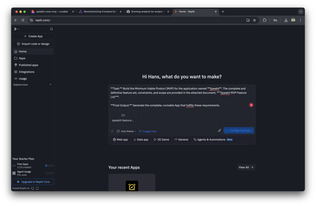

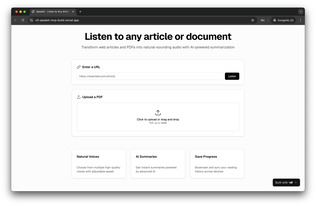

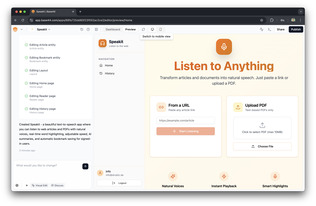

Task: Build the Minimum Viable Product (MVP) for the application named Speakit. The complete and definitive feature set, constraints, and scope are provided in the attached document, "Speakit MVP Feature List".

Final Output: Generate the complete, runnable App that fulfills these requirements.

I intentionally did not assign a role to the LLM; I believe the tools should handle this themselves.

After the initial generation, I ran one more iteration and asked each tool to verify that all features were implemented.

Task: Go through the list of features one more time thoroughly to verify that the app is feature complete. Implement what is missing.

I then compared the code using the following criteria:

Objective Metrics

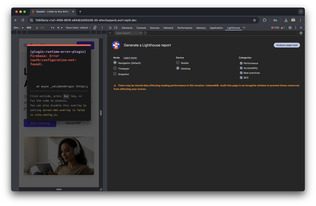

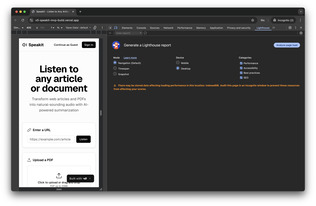

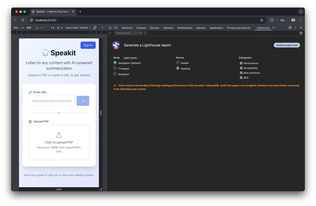

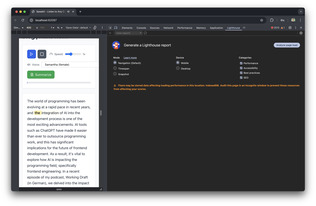

- Performance: Does the application perform well by modern standards on mobile and desktop? Measure: Lighthouse scores, run on my local machine

- Code Quality: Maintainability, readability, and adherence to best practices. Measure using: Maintainability Index, code complexity analysis (cyclomatic complexity), Lines of Code, and manual code review

- Export/Portability: Can you extract clean code and deploy elsewhere? Measure: Ability to run exported code without modifications, dependency management

- Cost: Pricing models and value for money. Measure: Cost per project, feature limits at each tier

- Tech Stack: What frameworks/libraries does it use? Are they modern and well-supported? Measure: Framework versions, community size, maintenance status

- Version Control Integration: Git support and collaboration features. Measure: Native Git support, commit history, branching capabilities

- Accessibility: Did the tool implement accessibility? Measure: Lighthouse scores, automated accessibility testing tools

- Error Handling: How well does generated code handle edge cases and errors? Measure: Test coverage, error boundary implementation, validation logic
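The Maintainability Index in the criteria above is a derived formula rather than a direct measurement. One widely used variant, the one Visual Studio reports, combines Halstead volume V, cyclomatic complexity G, and lines of code, then normalizes the result to a 0 to 100 range. The sketch below shows that formula only. The input values in the example are made up for illustration, not measured on the generated projects, and computing V itself requires a separate tool.

```typescript
// Inputs for one module or function. How they are measured (tooling, what counts
// as a "line") is an assumption left to the caller.
interface CodeMetrics {
  halsteadVolume: number;       // Halstead volume V (must be > 0)
  cyclomaticComplexity: number; // McCabe cyclomatic complexity G
  linesOfCode: number;          // lines of code (must be > 0)
}

// Maintainability Index normalized to 0..100 (the Visual Studio variant):
// MI = max(0, (171 - 5.2 ln V - 0.23 G - 16.2 ln LOC) * 100 / 171)
function maintainabilityIndex(m: CodeMetrics): number {
  if (m.halsteadVolume <= 0 || m.linesOfCode <= 0) {
    throw new RangeError("Halstead volume and lines of code must be positive");
  }
  const raw =
    171 -
    5.2 * Math.log(m.halsteadVolume) -
    0.23 * m.cyclomaticComplexity -
    16.2 * Math.log(m.linesOfCode);
  // Clamp at 0 and rescale so the best possible score is 100.
  return Math.max(0, (raw * 100) / 171);
}

// Hypothetical example values, not measurements from this comparison.
const mi = maintainabilityIndex({ halsteadVolume: 1000, cyclomaticComplexity: 5, linesOfCode: 100 });
console.log(mi.toFixed(1));
```

Higher is better; because of the logarithms, the score drops quickly for the first few hundred lines and more slowly after that.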

Subjective Metrics

- Developer Experience: Ease of iteration, debugging capabilities, and learning curve. Measure: User surveys, time to first successful deployment, qualitative assessment

- Iteration Speed: How quickly can you make changes and see results? Measure: Time from prompt to preview, number of iterations needed

Tooling Landscape

Here are the tools I looked at:

- Lovable

- Replit

- Vercel v0

- base44

Additionally, I used two editors to generate the app:

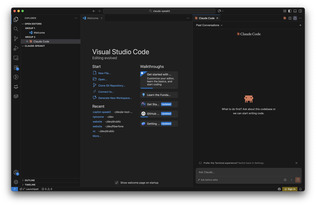

- Cursor Editor

- GitHub Copilot through VS Code

Plus, mainly because of the recent hype: Claude Code

There are obviously more tools. I left out Locofy.ai, Anima App, and Bolt purely because of the effort involved, as well as Framer, Stitch, Uizard, and Canva, which are all more design-leaning.

Comparison

(scroll horizontally)

| Metric | Lovable | Replit | Vercel v0 | base44 | Cursor | GitHub Copilot | Claude Code |
|---|---|---|---|---|---|---|---|
| Website | lovable.dev | replit.com | v0.dev | base44.com | cursor.com | github.com/copilot | https://www.claude.com/product/claude-code |
| Generated output | https://speakit-core-mvp.lovable.app | https://10609a1a-c1a1-4f69-8816-e84db3d05d38-00-efwvfsaqwnb.worf.replit.dev/ | https://v0-speakit-mvp-build.vercel.app/ | https://speakit-52ac2ce2.base44.app/ | - | - | - |
| Backend functionality | Yes, on request; Supabase DB and Auth integrated | Yes, connected to Firebase | Yes | Yes, own implementation | Yes, partial implementation | Yes, partial implementation | Yes |
| Performance (Lighthouse) | Mobile: 98, Desktop: 100 | Mobile: 54, Desktop: 55 (dev version) | Mobile: 92, Desktop: 93 | Mobile: 84, Desktop: 88 | Mobile: 84, Desktop: 100 | Mobile: 98, Desktop: 100 | Mobile: 98, Desktop: 100 |
| Code quality (lizard: LOC, complexity) | NLOC: 4182; AvgCCN: 2.1; Avg. tokens: 47.2; Function count: 250; well organized; modern code; no tests included | NLOC: 5368; AvgCCN: 1.9; Avg. tokens: 44.3; Function count: 296; well organized but somewhat unusual; modern code; no tests included | NLOC: 1886; AvgCCN: 2.4; Avg. tokens: 43.1; Function count: 117; well organized; modern code; no tests included | - | NLOC: 1433; AvgCCN: 2.3; Avg. tokens: 41.3; Function count: 114; well organized; modern code; no tests included | NLOC: 614; AvgCCN: 3.5; Avg. tokens: 50.6; Function count: 54; well organized; modern code; no tests included | NLOC: 614; AvgCCN: 3.5; Avg. tokens: 50.6; Function count: 54; well organized; modern code; no tests included |
| Export/portability | Each file individually; export to GitHub | Each file individually; download as ZIP | Each file individually; download as ZIP; CLI download | Download only with the $50 subscription plan, not even with the starter plan | Code is right at hand | Same as Cursor | Same as Cursor |
| Cost | $25 / month for 100 credits; $50 for 200 | $25 / month + pay as you go for additional usage | $20 / month + pay as you go for additional usage | $25 / month; backend only with the $50 / month plan | $20 / month | Free; next plan $10 for unlimited usage and latest models | $20 / month, or pay as you go via API connection |
| Tech stack | TypeScript, React, shadcn/ui with Radix UI, Tailwind, Vite | TypeScript, React, Radix UI, Framer Motion, Tailwind, Vite | TypeScript, React, Next.js, shadcn/ui with Radix UI, Tailwind | - | TypeScript, React, Tailwind | TypeScript, React, Next.js, Tailwind | TypeScript, React, Next.js, Tailwind |
| Version control integration | Yes, GitHub, 2-way sync | No; there seems to be a .git folder, but I could not find it | Yes, GitHub, 2-way sync | No; only with the $50 subscription plan, not even with the starter plan | No .git generated; manual work | No .git generated; manual work | No .git generated; manual work |
| Accessibility (Lighthouse) | 96 | 92 | 100 | 96 | 88 | 95 | 95 |
| Error handling | No (submitting a URL that returns a 404 page displays that page's content; no error message for PDFs >10 MB) | Yes | Yes | Yes | Yes | Yes | Yes |
| SEO | 100 | - (dev mode only) | 100 | 100 | 91 | 100 | 100 |
| Developer experience | 8/10; good: code visible and easy to change; not very verbose about what is going on | 5/10; a bit hard to find the right options in the overloaded interface; changing code is possible | 9/10; good: code visible, easy to change the code and each individual element; verbose about what is going on | 2/10; you can view the configuration of functionality, but no real code is visible and nothing can be edited | 7/10; manual work involved; all code visible and easy to change; verbose about what is going on | 7/10; manual work involved; easy-to-read codebase; verbose about what is going on; no shiny interface | 7/10; manual work involved; all code visible and easy to change; verbose about what is going on |
| Iteration speed | Initial: 58 s; worked for 5:41 min; verify: 2:29 min | Initial: 2 min; worked for 14 min | Worked for 9:46 min; verify: 4:21 min | Worked for 3 min; verify: 1:40 min | Worked for roughly 7 min; verify: 3:47 min | Worked for roughly 16 min; verify: 2 min | Worked for roughly 14 min; verify: roughly 4 min |
| Could you publish automatically? | Yes; custom domain possible | No; publishing requires a subscription | Yes; custom domain possible; integrates with Vercel | Yes; custom domains possible | No (editor) | No (editor) | No (editor) |
| Additional | + Security scanner, found 1 issue (missing RLS protection for users) + Page speed analysis + Uses its own AI gateway to enable AI functionality - Components installed (e.g. "recharts") but not used | + Asks you to do the design first + Made the app usable in guest mode without a Firebase connection - Verification only possible with a subscription - A pasted URL had to be submitted twice before the form worked - No README file - Components installed (e.g. "recharts") but not used | + Lets you choose a design system + Can let AI enhance your prompt + Design Mode lets you edit components individually (similar to base44) + Built an app that works without Firebase config (optional) + Fixed a Firebase error during verification - Error when trying to publish the project (fixed with one click) - Very minimalistic landing page | + Choose from predefined styles + Visual Edit lets you edit components individually (similar to v0) + Offers a security scan before publishing + Email verification for accounts - No Markdown upload | + Verbose output while working - No Markdown upload - Initial build raised the error "fs can't be resolved"; I fixed it to get any result - Very minimalistic landing page | + Verbose output while working - Landing page has only minimal functionality - No Markdown upload - No mention that the .env file must be filled in - Worked very long for little result | - Requires a subscription even if you just want to test - No Markdown upload - Text in the app was hard to read |
| Repository link | https://github.com/drublic/lovable-speakit-core-mvp | https://github.com/drublic/replit-speakit | https://github.com/drublic/v0-speakit-mvp-build | - | https://github.com/drublic/cursor-speakit | https://github.com/drublic/copilot-speakit | https://github.com/drublic/claude-speakit |
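The error-handling row and the PDF upload results come down to two checks that several tools skipped: rejecting PDFs above the 10 MB limit from feature 1.2, and refusing to treat an HTTP error page as article content. The sketch below shows both as plain functions. It assumes the limit means 10 * 1024 * 1024 bytes, the function and type names are hypothetical, and the interfaces only describe the fields used, so a browser `File` or a fetch `Response` fits them structurally.

```typescript
// Minimal shape of a browser File; a real File object satisfies it.
interface UploadCandidate {
  name: string;
  size: number; // bytes
  type: string; // MIME type reported by the browser
}

// Assumption: "10MB" in the feature list means 10 MiB.
const MAX_PDF_BYTES = 10 * 1024 * 1024;

type ValidationResult = { ok: true } | { ok: false; message: string };

function validatePdfUpload(file: UploadCandidate): ValidationResult {
  // Accept if either the MIME type or the extension says PDF (lenient on purpose).
  const looksLikePdf =
    file.type === "application/pdf" || file.name.toLowerCase().endsWith(".pdf");
  if (!looksLikePdf) {
    return { ok: false, message: `"${file.name}" is not a PDF file.` };
  }
  if (file.size > MAX_PDF_BYTES) {
    const mb = (file.size / (1024 * 1024)).toFixed(1);
    return { ok: false, message: `"${file.name}" is ${mb} MB; the limit is 10 MB.` };
  }
  return { ok: true };
}

// Minimal shape of a fetch Response; a real Response satisfies it.
interface FetchedPage {
  ok: boolean; // true for 2xx status codes
  status: number;
  url: string;
}

// Reject non-2xx responses instead of rendering an error page's HTML as "content".
function validateFetchedPage(res: FetchedPage): ValidationResult {
  if (!res.ok) {
    return { ok: false, message: `Could not load ${res.url} (HTTP ${res.status}).` };
  }
  return { ok: true };
}
```

In a React app, `validatePdfUpload` would run in the file input's change handler and `validateFetchedPage` right after the `fetch` call, before any text extraction, so the user sees a message instead of a broken reader.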

Feature Completeness

(scroll horizontally)

| Feature ID | Feature | Lovable | Replit | Vercel’s v0 | base44 | Cursor | Copilot | Claude |

|---|---|---|---|---|---|---|---|---|

| 1.1 | URL Input | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| 1.2 | PDF Upload (max 10MB, text-based) | Yes | Yes | Yes | Yes, allowed >10mb | Yes | Yes, allowed >10mb | Yes, allowed > 10mb |

| 1.3 | AI Summarization (gemini-2.5-flash) | Yes | Yes | No, there was an error | Yes | No | No (reader page not accessible) | Yes |

| 2.1 | Web Content Extraction | Yes, but also footer and header etc. was extracted. | Yes | Yes | Partially, some pages just did not load | Yes | No (reader page not accessible) | Yes |

| 2.2 | PDF Text Extraction | No, PDF reading did not work as it should, did not find text | No, failed for all tested PDFs | No, failed to extract content | Yes | No, failed for all tested PDFs | No (reader page not accessible) | No, failed for all tested PDFs |

| 3.1 | TTS Voice Selection (min 2 voices) | Yes | Yes | Yes | Yes, significantly less voices than others | Yes, voice selection did not work | No (reader page not accessible) | Yes, just 2 voices, but that’s the spec ;) |

| 3.2 | Playback Controls (Play/Pause/Resume/Stop) | Yes, only 1 word played | Yes | Yes | Sometimes played, sometimes not | Buttons available, but did not work | No (reader page not accessible) | Yes |

| 3.3 | Reading Speed (0.5x to 2.0x) | Yes | Yes | Yes | Yes | Yes | No (reader page not accessible) | Yes |

| 3.4 | Instant Playback | Yes | No | Yes | Sometimes yes, sometimes | Yes | No (reader page not accessible) | Yes |

| 4.1 | Real-Time Word Highlighting | Yes | Yes, after initial voice selection | Yes, but is not accurate | No | Yes | No (reader page not accessible) | Yes |

| 4.2 | Auto-Scrolling | Yes | Yes, after initial voice selection | Yes | No | Yes | No (reader page not accessible) | Yes |

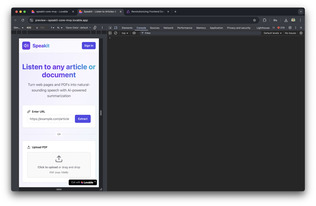

| 5.1 | Landing Page (URL input + PDF upload) | Yes | Yes, inserted AI generated image | Yes | No | Yes | Yes, very limited | Yes |

| 5.2 | Minimal Reader Interface | Yes, but could be more minimalistic | Yes | Yes, but could be more minimalistic | Yes | Yes | No (reader page not accessible) | Yes |

| 5.3 | Progress Bar | No | Yes (X of XXX Words played) | Yes | Yes | Yes | No (reader page not accessible) | Yes |

| 5.4 | Responsive Design (desktop/tablet/mobile) | Partially, Controls were not fully visible | Yes | Yes | Yes | Yes | Yes | Yes |

| 5.5 | Authentication UI (Log In/Sign Up) | Yes | No, Error while calling the Firebase Login | Yes | Yes | Yes | Yes (same page with two buttons) | Yes |

| 6.1 | Guest Mode + Authenticated Accounts (Firebase) | Yes, used own Database | No, Firebase failure | No, failed to create account | No | No, failed to create account | No, failed to create accounts | No, failed to create accounts |

| 6.2 | Data Persistence (session-based for guests, Firestore for authenticated) | Yes, used own Database | No | No | No | No | No | No |

| 6.3 | Security (HTTPS) | Yes | Yes | Yes | Yes | - | - | - |
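Real-time word highlighting (4.1) and the reading-speed range (3.3) are where the tools diverged most in playback quality. I did not check which speech engine each app uses; a common browser option, and an assumption here, is the Web Speech API, where a `SpeechSynthesisUtterance` fires `boundary` events carrying a `charIndex` that has to be mapped back to a word. The sketch below shows that mapping plus a clamp to the 0.5x to 2.0x range. The helper names are hypothetical, and the browser wiring stays in comments because it needs a real `speechSynthesis` object.

```typescript
interface WordSpan {
  word: string;
  start: number; // character offset of the word in the full text
}

// Split text on whitespace and record where each word starts.
function indexWords(text: string): WordSpan[] {
  const spans: WordSpan[] = [];
  const re = /\S+/g;
  let match: RegExpExecArray | null;
  while ((match = re.exec(text)) !== null) {
    spans.push({ word: match[0], start: match.index });
  }
  return spans;
}

// Binary search: index of the last word starting at or before charIndex (-1 if none).
function wordIndexAt(spans: WordSpan[], charIndex: number): number {
  let lo = 0;
  let hi = spans.length - 1;
  let found = -1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (spans[mid].start <= charIndex) {
      found = mid;
      lo = mid + 1;
    } else {
      hi = mid - 1;
    }
  }
  return found;
}

// Keep the playback rate inside the 0.5x to 2.0x range from the feature list.
function clampRate(rate: number): number {
  return Math.min(2.0, Math.max(0.5, rate));
}

// Browser wiring (not executed here; `highlightWord` is a hypothetical UI callback):
// const utterance = new SpeechSynthesisUtterance(text);
// utterance.rate = clampRate(userRate);
// const spans = indexWords(text);
// utterance.onboundary = (e) => {
//   if (e.name === "word") highlightWord(wordIndexAt(spans, e.charIndex));
// };
// speechSynthesis.speak(utterance);
```

Precomputing the word offsets once keeps each boundary event cheap, which matters because these events fire for every spoken word; auto-scrolling (4.2) can hang off the same highlighted index.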

Insights

Lovable

Easy to use, and the backend integration is a huge plus. The app worked only partially but looked decent. The core functionality didn't work, which is a major drawback. With further iterations, it seems possible to make the app fully functional.

Replit

The "Design First" approach is smart: it lets you iterate on the design without full code integration in the background.

I really liked the overall design of the application. It was clean and functional. The errors seem easily solvable, except perhaps the Firebase backend, where built-in backend functionality would help.

Overall, creating the application took very long, especially compared to the other tools.

Vercel’s v0

v0 was thorough in checking that functionality exists and works, though it missed some integration pieces, specifically Summary and Login. These issues could likely be fixed by pointing them out explicitly again, but that shouldn't be necessary for a tool like this. v0 integrates extremely well with the Vercel platform. Without the Firebase requirement, the tool might have had an easier time, since it could have used Supabase, which integrates seamlessly with Vercel.

base44

I added this tool because I see frequent ads for it on YouTube. That was a mistake.

For an engineer, this tool is a nightmare: there's no code access at all. While it has some functionality, it missed critical features like Guest Mode and Bookmarks. Not being able to view or export the generated code makes it a non-starter for me. This also prevented me from running code quality metrics.

The app itself was unusable since content didn't play reliably. Some functionality felt over-engineered. However, it was the only tool that could play some PDFs, though inconsistently. The pricing is steep. I don't recommend it.

Cursor

I have used Cursor extensively to build my own applications, well beyond what I tested here, so I'm speaking from long-term use.

This editor is gaining new features remarkably fast. What I really love is its planning mode, where you first iterate on the architecture of an implementation, refine it, and then, once it's ready, either build it yourself or let the tool handle it. For me, this feels like the architectural discussions I would have with a team or coworker, except you're having them with an AI that knows your code.

For the given scenario, the page presentation was well executed and looked nice. However, some features weren't implemented well, which caused failures. For an AI that runs in the editor, it did surprisingly well.

GitHub Copilot

To be honest, this is the result I had expected from Cursor as well. Copilot seems to excel at other tasks. The landing page was somewhat of a disaster. The functionality didn't come close to meeting the requirements, and the tool wasn't clear about what I needed to do with the code.

This tool is built for a different job: it's more of an AI pair programmer.

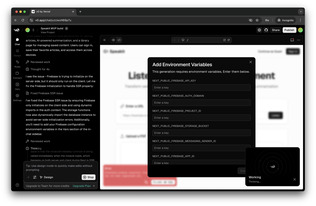

Claude Code

It's unfortunate that you can't test the tool without a subscription. I used the API connection instead and topped up the account with €5 plus taxes. Generating this app cost me $3.34, which is a significant amount. I'd definitely recommend the subscription instead.

When testing the app, I was surprised by how accurately the features were implemented, except for the failing sign-up/login, which seems to be a common issue across most tools.

Though it wasn't fast, this tool comes close to what I'd expect from an agentic code generator.

Limitations

This evaluation has several important limitations that should be considered when interpreting the results:

Testing Timeframe Constraints: This review is a snapshot taken in October 2025. Testing took place over a limited period, so some tool features or capabilities may have been missed or not fully explored. Each tool received the same initial prompt and one follow-up iteration, which may not represent its capabilities in extended development scenarios (for example, Cursor's planning mode).

Evolving Nature of AI Tools: The AI frontend generation space is rapidly evolving, with frequent updates, new features, and model improvements being released weekly. By the time you read this, some of the tools may have significantly improved or changed their capabilities.

Subjective Nature of Some Assessments: Several metrics in this evaluation, particularly in the "Subjective Metrics" section, rely on personal experience and judgment.

Conclusion

Interestingly, all tools whose code I could inspect defaulted to a very similar tech stack, even though it wasn't specified. A common tech stack for web apps appears to be emerging. What does this mean for the engineering community?

Here are all the patterns I identified:

Patterns

- All tools with visible code defaulted to a similar tech stack (TypeScript, React, and Tailwind CSS, built with Vite or Next.js) without being explicitly asked, suggesting an emerging standard for AI-generated web apps

- Authentication and login functionality was a consistent weak point across multiple tools, indicating this remains a challenging area for AI code generation

- Tools fell into distinct categories: productivity enhancers (Copilot, Claude Code), refactoring specialists (Cursor), and prototype generators (v0, Lovable) → see Recommendations below

- Editor-based tools (Cursor, Claude Code) provided more control and transparency but required more technical knowledge, while standalone generators (v0, Lovable) were faster but less flexible

- Cost models varied dramatically, from subscription-based to pay-per-generation, with significant implications for different use cases; $20-25 / month seems to be a good middle ground, though

- Code quality and maintainability varied widely, with some tools producing production-ready code while others generated prototypes requiring significant refactoring

- Most tools struggled with complex feature integration and edge cases, requiring multiple iterations or manual intervention

- Visual design quality was surprisingly high across most tools, but functional completeness often lagged behind aesthetic presentation
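Authentication and persistence (feature rows 6.1 and 6.2) failed for every tool except Lovable. One design that keeps guest mode usable even when the auth backend misbehaves is to hide persistence behind a small interface and pick the backend from the auth state. This is a minimal sketch under my own assumptions: `ReadingProgress`, `ProgressStore`, `SessionProgressStore`, and `selectStore` are hypothetical names, a `Map` stands in for `sessionStorage`, and the Firestore-backed store for signed-in users is not shown.

```typescript
// Progress saved for one document (hypothetical shape).
interface ReadingProgress {
  documentId: string;
  wordIndex: number;
  rate: number;
}

// Storage abstraction; async because a remote store (e.g. Firestore) would be.
interface ProgressStore {
  save(progress: ReadingProgress): Promise<void>;
  load(documentId: string): Promise<ReadingProgress | undefined>;
}

// Guest store: lives only as long as the session. A Map stands in for sessionStorage.
class SessionProgressStore implements ProgressStore {
  private readonly data = new Map<string, ReadingProgress>();

  async save(progress: ReadingProgress): Promise<void> {
    this.data.set(progress.documentId, { ...progress }); // store a copy
  }

  async load(documentId: string): Promise<ReadingProgress | undefined> {
    return this.data.get(documentId);
  }
}

// Choose the store from the auth state. `remoteStore` would be a Firestore-backed
// implementation for signed-in users (hypothetical, not shown).
function selectStore(
  userId: string | null,
  guestStore: ProgressStore,
  remoteStore: ProgressStore,
): ProgressStore {
  return userId === null ? guestStore : remoteStore;
}
```

If sign-in fails, `userId` simply stays `null`, so the reader keeps working on the guest store instead of breaking outright.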

Recommendations

What is your primary use case?

For boosting day-to-day productivity: If you need completion, chat, and suggestions to enhance your daily workflow → choose Copilot or Claude Code.

For transforming how you build: If you want to refactor legacy code, automate feature branches, generate test suites, or handle cross-module design → choose Cursor.

For building from scratch or prototyping: If you're starting a new app, launching a business, need inspiration, want to modify an existing codebase without deep knowledge, or need rapid prototyping → choose v0.

Wrapup

So, this is my experience with AI frontend generators. What is yours? Did I miss any tools? Did one tool work exceptionally well for you? Let me know on LinkedIn.